The internet has been abuzz for the past few days with a new climate forecasting study by Terence Mills, a well-known statistician at Loughborough University in the UK. The report is highly technical and reads as something of a mathematics textbook on how to design Box-Jenkins type ARIMA forecasting models for climate data, so statistical novice readers be warned. But there’s also an important takeaway that climate scientists, policymakers, and laymen should heed.

Mills’ larger point is that the earth’s climate is an exceedingly complex system. Making climate forecasts about temperature data, atmospheric carbon, sea level rise, and the sort accordingly requires the use of statistical methods that are appropriate for handling complex time series data. The relevant forecasting techniques involve mathematical tools that have been refined over many decades of ever-advancing computer capabilities. The aforementioned Box-Jenkins approach is now something of the industry standard for complex system time series forecasting in multiple fields of applied mathematics, science, finance, and economics. It has many modifications and can be carefully tailored to account for things like seasonality in a data set, or used to tease out specific internal patterns that overlay a larger time series.

The product of these approaches is highly useful to forecasters though. ARIMAs and similar moving average models utilize a historical data set to fit and project a trend line forward from the present by a specified number of days/months/years. The resulting forecasts are probabilistic, that is to say they come with upper and lower confidence boundaries. They also carry the added benefit of endogenizing the historical data of the time series that they are derived from, which helps to work around the problem of having to make sometimes-questionable and/or discretionary assumptions about input variables that are causally complex, difficult to isolate, and interrelated to one another in addition to the overall trend. With all of that in mind, an ARIMA technique, undergoing a few appropriate modifications, would appear to be an extremely useful tool to adapt to climate forecasting.

It might therefore come with some surprise to learn that many long term global temperature “forecasts” by climatologists actually do not actually employ these standard forecasting tools. There are a few exceptions, including several papers by Mills that expand upon the techniques in his new report. But compared to most other applied mathematics, climatology as a whole is still very much in the statistical dark ages.

In avoiding adaptations from modern forecasting techniques, climatology has instead shown a marked preference for an older way of making predictions that is not only, arguably, less reliable on the whole but also prone to overstating the precision of its claims about distant future events. Many of the global temperature “forecasts” in the climatology literature are in fact chained to highly specific assumptions about atmospheric carbon release. They start not in the historical data set as a whole, but by attempting to isolate the theorized effects of carbon dioxide on atmospheric temperature, and proceed from a calculated assumption of what specific levels of additional carbon release will supposedly do to the climate model many decades from now. There is some merit in asking that question as a point of scientific inquiry, but it also has a significant drawback when it forms the basis of an entire model for a complex climate system. If the underlying initial assumption about carbon is wrong – that is to say, if it errs and either understates or overstates the cumulative effects of carbon on atmospheric temperatures – then the entire model loses its predictive ability, irrespective of what the historical temperature record says.

The statistical forecasting approach proposed by Mills is not nearly as susceptible to this flaw, because it is not premised upon an attempt to isolate a specific causal component from an exceedingly complex system. Statistical forecasting, as a result, also tends to be more cautious about its predictions on the whole precisely because it allows for a great deal of future uncertainty.

Hydraulic Climatology?

The present discussion of statistical techniques brings up an interesting point for historical comparison. Like the earth’s climate, the economy is a marvelously complex system. Causal directions do exist in economic activity, but they are also notoriously difficult to isolate and practically impossible to plan. Most economists have long accepted the reality of these difficulties, particularly in the wake of the fall of the Soviet Union and other disastrous 20th century experiments with central planning. But this was not always the case, even in countries that stopped short of the more extreme forms of what they thought to be a properly designed economy. Mid-20th century Keynesianism offers us an illustrative example in which leading economists of the day believed they had figured out most of the causal mechanisms of the macroeconomy. They translated theory into prescriptive policies, each premised upon an assumption that specific actions by the government would yield specific – and predictable – results, as measured in economic growth.

Was the economy growing too slowly? Cut taxes and/or increase government spending, and growth would accelerate! Was the economy expanding too fast? Raise interest rates to curtail credit expansion and lending! Was the economy headed for a recession? Deficit spend to reverse course! And perhaps most infamously, was unemployment too high? Be willing to tolerate a higher level of inflation and it will go away, or so they promised. The tradeoffs in all of these areas were said to be exploitable and predictable with finely tuned precision. Unfortunately for these prescriptive economic designers of the last mid-century, the outcomes of their policies seldom worked out as projected.

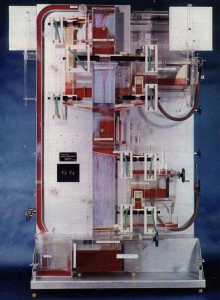

Initially this did little to deter the planning impulse. In one rather humorous example, economist Bill Phillips even built a rudimentary hydraulic computer that would supposedly “model” the entire economic system of a country. The resulting machine, called the MONIAC, was intended to be an instructional device for Keynesian policymaking – a visual representation of the tools that they believed they could use to isolate and control all the components of a complex system. The device was essentially an assemblage of fish tanks connected by tubes and a pump that pushed fluids through it in a circular pattern. They operated the machine with a series of valves and levers, which would control the flow of water into different tanks to correspond to different policies. Taxes could be raised and lowered. New “spending” could be added with the release of more water. The money supply could be loosened or tightened and so forth, with the predicted “effect” being seen in changes to the overall rate of flow, or the resulting level of economic output.

Economics has fortunately moved well beyond the days of the hydraulic MONIAC machine and its underlying assumptions about economic causality. We have since learned – sometimes the hard way – that the economy is simply too complex to causally map out as a precise system of policy levers that could shape production and commerce with reliable and predictable outcomes. Forecasting took a similar turn as well, moving away from drawing out trend lines that were backfilled to fit the predictions of overly simplified causal relationships. In its place, economics adopted the statistical conventions of modern forecasting tools with their associated cautions against making long range predictions and claims of precision.

In a strange way, modern climatology shares much in common with the approach of 1950s Keynesian macroeconomics. It usually starts with a number of sweeping assumptions about the relation between atmospheric carbon and temperature, and presumes to isolate them to specific forms of human activity. It then purports to “predict” the effects of those assumptions with extraordinarily great precision across many decades or even centuries into the future. It even has its own valves to turn and levers to pull – restrict carbon emissions by X%, and the average temperature will supposedly go down by Y degrees. Tax gasoline by X dollar amount, watch sea level rise dissipate by Y centimeters, and so forth. And yet as a testable predictor, its models almost consistently overestimate warming in absurdly alarmist directions and its results claim implausible precision for highly isolated events taking place many decades in the future. These faults also seem to plague the climate models even as we may still accept that some level of warming is occurring.

The failure of central planning broke the MONIAC style of Keynesian lever-pulling for economic analysis and forecasting in the last century. One can only hope that the modernization of climatology will include a greater receptivity to the statistical forecasting techniques that have seen widespread use and success in other scientific fields. With the benefit of sounder forecasting tools, climatology might also finally adopt a greater level of scientific caution when making sweeping predictive assertions about highly complex and highly uncertain future events.